One of the challenges of virtual reality is locomotion. In room-scale VR, users can walk around, but the size of the physical room limits how far they can go. Mobile VR users with only three degrees of freedom have no positional tracking, so they have to rely on head movement or controllers.

Teleportation is a fun way to get around the limitations of VR. Although not truly natural, users are accustomed to the concept of teleportation from sci-fi and fantasy, and it is not hard to implement.

We will be building a scene for Oculus Go/Gear VR that demonstrates teleportation using the controller as a pointer.

Source Code

The Unity project for this tutorial is available for download here. To save space, Oculus Utilities and VR Sample Scenes are not included, but the source files that were modified are included in the top level of the project. These files are OVRPlugin.cs, VREyeRaycaster.cs, and Reticle.cs.

Setup

To get started, create a new Unity project, Teleporter. Create the folder Assets/Plugins/Android/assets within the project, and copy your phone’s Oculus signature file there. See https://dashboard.oculus.com/tools/osig-generator/ to create a new signature file.

Select File -> Build Settings from the menu and choose Android as the platform. Click Player Settings to open PlayerSettings in the Unity inspector. Check Virtual Reality Supported, add Oculus under Virtual Reality SDKs, and select API Level 19 as the Minimum API Level.

Download Oculus Utilities from https://developer.oculus.com/downloads/package/oculus-utilities-for-unity-5/ and extract the zip file on your computer. Import Oculus Utilities into your project by selecting Assets -> Import Package -> Custom Package within Unity and choosing the location of the package you extracted. If you are prompted to update the package, follow the prompts to install the update and restart Unity.

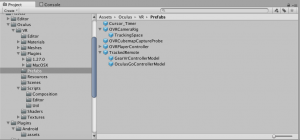

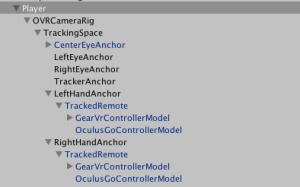

A folder named Oculus will be created in your project. Oculus/Prefabs contains the OVRCameraRig and TrackedRemote prefabs that we will use to implement controller support. If you expand TrackedRemote in the project view, you’ll see that it contains prefabs for the Gear VR and Oculus Go controllers.

If you get the error message “‘OVRP_1_15_0’ does not exist in the current context,” open Assets/Oculus/VR/Scripts/OVRPlugin.cs and add #if !OVRPLUGIN_UNSUPPORTED_PLATFORM and #endif around code that checks the OVRPlugin version. You can find a fixed copy of OVRPlugin.cs in the included source. This error probably does not occur on Windows.

We will also need the VR Samples package from the Unity Asset Store. This package includes a number of sample scenes as well as prefabs and scripts that we will customize to add a laser pointer and reticle. Click on the Asset Store tab in Unity and search for “VR Samples.” When the package page has loaded, click the Import button and follow the instructions to import the package. The folders VRSampleScenes and VRStandardAssets will be created in your Assets folder.

Preparing Your Headset

If you are using the Gear VR, you will need to enable developer options on your phone so that Unity can install your app over USB. In your phone settings, go to “About Device” and tap “Build number” seven times, then turn on USB debugging in the developer options that appear.

You will also need to activate Developer Mode if you are using an Oculus Go. First, you need to create an organization in your Oculus account at https://dashboard.oculus.com/. Then, in the Oculus mobile app, choose the headset you’re using from the Settings menu, select More Settings, and toggle Developer Mode. See https://developer.oculus.com/documentation/mobilesdk/latest/concepts/mobile-device-setup-go/ for details.

Camera and Controller

Let’s start by setting up a VR camera and adding controller support. First, remove the Main Camera object that was created by default. We will be using a custom camera.

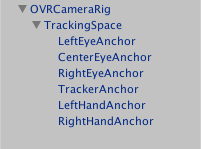

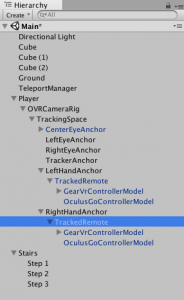

Create an instance of the OVRCameraRig prefab included in Oculus Utilities by dragging Assets/Oculus/VR/Prefabs/OVRCameraRig from the project window to the hierarchy window. Expand OVRCameraRig, and you’ll see that it contains a TrackingSpace with anchors for the eyes and hands. Set the position of OVRCameraRig to (0, 1.8, 0) to approximate the height of a person.

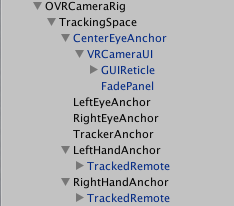

CenterEyeAnchor is the placeholder for our camera that we will replace with the MainCamera prefab included in VR Sample Scenes. Drag Assets/VRSampleScenes/Prefabs/Utils/MainCamera from the project window to the hierarchy. Rename this instance CenterEyeAnchor and nest it under OVRCameraRig -> TrackingSpace. Remove the original CenterEyeAnchor.

Now add the controller using the Assets/Oculus/Prefabs/TrackedRemote prefab. Create instances of TrackedRemote as children of OVRCameraRig -> TrackingSpace -> LeftHandAnchor and OVRCameraRig -> TrackingSpace -> RightHandAnchor. TrackedRemote contains models for both the Gear VR controller and the Oculus Go controller. When you run this scene, the correct controller model is displayed depending on which headset you’re using.

Make sure the Controller field of the TrackedRemote instance under LeftHandAnchor is set to “L Tracked Remote.” The instance under RightHandAnchor should be set to “R Tracked Remote.” You can do this in the Unity inspector.

If both hands are set correctly, the controller will automatically be displayed for the correct hand when you run the scene.

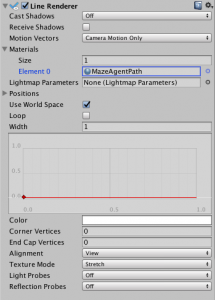

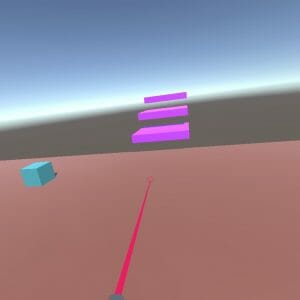

Next, add a laser beam that extends from the controller. Add a Line Renderer to CenterEyeAnchor by clicking “Add Component” in the inspector and typing “Line Renderer” in the search box. Disable “Receive Shadows” and set the material to MazeAgentPath, a red material included with the VR Sample Scenes package. If you want a different colored laser, you can easily create a different colored material and use it in the Line Renderer.

Under the Width field, drag the slider down until the width is about 0.02. If the width is too large, the laser will look like a thick block instead of a thin beam.

Coding a Laser Beam

Our next step will turn the controller into a laser pointer. To do this, we modify the scripts attached to CenterEyeAnchor and VRCameraUI using the steps described in https://developer.oculus.com/blog/adding-gear-vr-controller-support-to-the-unity-vr-samples/. The modified scripts are included in the source code package that comes with this tutorial.

The script Assets/VRStandardAssets/Scripts/VREyeRaycaster.cs is attached to CenterEyeAnchor. Add these fields to the VREyeRaycaster class:

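```csharp
[SerializeField] private LineRenderer m_LineRenderer = null; // Draws the laser beam
public bool ShowLineRenderer = true;                         // Laser beam visibility
[SerializeField] private Transform m_TrackingSpace = null;   // Controller poses are relative to this
```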

Add the following accessors to VREyeRaycaster:

```csharp
/* Accessors for controller */

// True if exactly one tracked remote (left or right) is connected.
public bool ControllerIsConnected {
    get {
        OVRInput.Controller controller = OVRInput.GetConnectedControllers () & (OVRInput.Controller.LTrackedRemote | OVRInput.Controller.RTrackedRemote);
        return controller == OVRInput.Controller.LTrackedRemote || controller == OVRInput.Controller.RTrackedRemote;
    }
}

// The connected tracked remote, or the active controller if no remote is connected.
public OVRInput.Controller Controller {
    get {
        OVRInput.Controller controller = OVRInput.GetConnectedControllers ();
        if ((controller & OVRInput.Controller.LTrackedRemote) == OVRInput.Controller.LTrackedRemote) {
            return OVRInput.Controller.LTrackedRemote;
        } else if ((controller & OVRInput.Controller.RTrackedRemote) == OVRInput.Controller.RTrackedRemote) {
            return OVRInput.Controller.RTrackedRemote;
        }
        return OVRInput.GetActiveController ();
    }
}
```

Controller returns the tracked remote that is connected (falling back to the active controller if there is none), and ControllerIsConnected reports whether a single tracked remote is connected.
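The bitmask logic in these two accessors can be tried outside Unity. The sketch below is an illustration, not Oculus code: the Controller enum is a hypothetical stand-in for OVRInput.Controller, and its bit values are made up for the example.

```csharp
using System;

// Hypothetical stand-in for OVRInput.Controller. The bit values are
// illustrative only and are NOT the real OVRInput constants.
[Flags]
public enum Controller
{
    None = 0,
    LTrackedRemote = 1 << 0,
    RTrackedRemote = 1 << 1,
    Touchpad = 1 << 2,
}

public static class ControllerSelection
{
    // Mirrors ControllerIsConnected: keep only the tracked-remote bits and
    // return true when exactly one of them is set.
    public static bool IsConnected(Controller connected)
    {
        Controller remotes = connected & (Controller.LTrackedRemote | Controller.RTrackedRemote);
        return remotes == Controller.LTrackedRemote || remotes == Controller.RTrackedRemote;
    }

    // Mirrors the Controller property: prefer the left remote, then the right,
    // otherwise fall back to whichever controller is currently active.
    public static Controller Select(Controller connected, Controller active)
    {
        if ((connected & Controller.LTrackedRemote) == Controller.LTrackedRemote)
            return Controller.LTrackedRemote;
        if ((connected & Controller.RTrackedRemote) == Controller.RTrackedRemote)
            return Controller.RTrackedRemote;
        return active;
    }
}
```

With a right remote reported alongside the touchpad (`Controller.RTrackedRemote | Controller.Touchpad`), IsConnected returns true and Select returns RTrackedRemote. If both remotes were reported at once, IsConnected would return false, because the masked value matches neither single flag; the accessor above behaves the same way, which is fine when only one remote is paired.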

Now look for the EyeRaycast function and find the line:

```csharp
RaycastHit hit;
```

After this line, add:

```csharp
Vector3 worldStartPoint = Vector3.zero;
Vector3 worldEndPoint = Vector3.zero;

// Show the laser beam only when a tracked remote is connected.
if (m_LineRenderer != null) {
    m_LineRenderer.enabled = ControllerIsConnected && ShowLineRenderer;
}
```

This code enables the line renderer, making the laser beam visible, only when a controller is connected and ShowLineRenderer is true.

Add more code after this to convert the controller’s position and rotation, which OVRInput reports relative to TrackingSpace, into world coordinates:

```csharp
if (ControllerIsConnected && m_TrackingSpace != null) {
    Matrix4x4 localToWorld = m_TrackingSpace.localToWorldMatrix;
    Quaternion orientation = OVRInput.GetLocalControllerRotation (Controller);

    // Start the beam at the controller and extend it 500 units along its forward axis.
    Vector3 localStartPoint = OVRInput.GetLocalControllerPosition (Controller);
    Vector3 localEndPoint = localStartPoint + ((orientation * Vector3.forward) * 500.0f);

    worldStartPoint = localToWorld.MultiplyPoint (localStartPoint);
    worldEndPoint = localToWorld.MultiplyPoint (localEndPoint);

    // Cast the ray from the controller instead of the camera.
    ray = new Ray (worldStartPoint, worldEndPoint - worldStartPoint);
}
```

Now find the line:

```csharp
VRInteractiveItem interactible = hit.collider.GetComponent<VRInteractiveItem>();
```

and add the following code after it:

```csharp
// Stop the beam where it hits an interactive item.
if (interactible) {
    worldEndPoint = hit.point;
}
```

This sets the end point of the laser beam to the point where it hits a VRInteractiveItem.
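The end-point rule can be isolated as a small function. This is a Unity-free sketch, not the VR Samples code: HitInfo is a hypothetical struct standing in for a RaycastHit plus the GetComponent<VRInteractiveItem>() check, and System.Numerics.Vector3 stands in for UnityEngine.Vector3.

```csharp
using System.Numerics;

// Hypothetical summary of a raycast hit: where it landed and whether the
// collider carries a VRInteractiveItem.
public readonly struct HitInfo
{
    public HitInfo(Vector3 point, bool isInteractive)
    {
        Point = point;
        IsInteractive = isInteractive;
    }

    public Vector3 Point { get; }
    public bool IsInteractive { get; }
}

public static class BeamEndpoint
{
    // Shorten the beam to the hit point only for interactive items; otherwise
    // keep the full-length end point computed from the controller pose.
    public static Vector3 Choose(Vector3 fullLengthEnd, HitInfo? hit) =>
        (hit is HitInfo h && h.IsInteractive) ? h.Point : fullLengthEnd;
}
```

With this rule, a collider that has no VRInteractiveItem does not shorten the beam, even though the raycast stops there.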

At the end of the EyeRaycast function, add:

```csharp
if (ControllerIsConnected && m_LineRenderer != null) {
    m_LineRenderer.SetPosition (0, worldStartPoint);
    m_LineRenderer.SetPosition (1, worldEndPoint);
}
```

to make the endpoints of the laser beam the same as the endpoints of the ray we’re casting.
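Taken together, the EyeRaycast changes compute the beam in tracking-space coordinates and then convert both ends to world space. Here is a Unity-free sketch of that math, offered as an illustration under assumptions rather than the Oculus code: System.Numerics types stand in for Unity’s Vector3, Quaternion, and Matrix4x4, +Z is treated as forward (as Vector3.forward is in Unity), and LaserMath, WorldBeam, and RayDirection are hypothetical names.

```csharp
using System.Numerics;

public static class LaserMath
{
    // Same beam length as the EyeRaycast code.
    public const float BeamLength = 500.0f;

    // Converts the controller pose from tracking-space (local) coordinates to
    // world coordinates and returns the beam's world start and end points.
    public static (Vector3 Start, Vector3 End) WorldBeam(
        Matrix4x4 trackingSpaceLocalToWorld,
        Vector3 localControllerPosition,
        Quaternion localControllerRotation)
    {
        // Rotate "forward" (+Z) by the controller orientation.
        Vector3 localForward = Vector3.Transform(Vector3.UnitZ, localControllerRotation);
        Vector3 localEnd = localControllerPosition + localForward * BeamLength;

        // Transform both points with the same matrix (like MultiplyPoint).
        Vector3 worldStart = Vector3.Transform(localControllerPosition, trackingSpaceLocalToWorld);
        Vector3 worldEnd = Vector3.Transform(localEnd, trackingSpaceLocalToWorld);
        return (worldStart, worldEnd);
    }

    // Unity's Ray constructor normalizes its direction; this mirrors that step.
    public static Vector3 RayDirection(Vector3 worldStart, Vector3 worldEnd) =>
        Vector3.Normalize(worldEnd - worldStart);
}
```

For example, with TrackingSpace raised to y = 1.8 and the controller at the tracking-space origin turned 90 degrees about the vertical axis, the beam runs from (0, 1.8, 0) to roughly (500, 1.8, 0). Because both ends go through the same local-to-world matrix, the beam stays attached to the controller wherever the camera rig is placed.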

Reticle

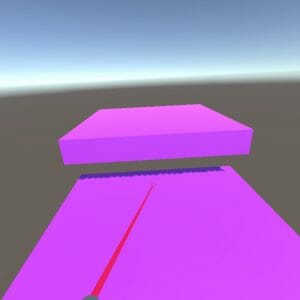

The end of the laser beam will have a reticle, or a targeting circle. The MainCamera prefab already includes a reticle that is displayed in front of the camera. We’re going to change its code so that the reticle is automatically displayed at the end of the laser beam if a controller is connected, but displayed in front of the camera if there is no controller.

In Assets/VRSampleScenes/Scripts/Utils/Reticle.cs, find the SetPosition() function. Change this function so that it takes two arguments, a position and a direction:

```csharp
public void SetPosition (Vector3 position, Vector3 forward) {
    // Place the reticle the default distance along the ray from position.
    m_ReticleTransform.position = position + forward * m_DefaultDistance;

    // Scale by the distance so the reticle keeps the same apparent size.
    m_ReticleTransform.localScale = m_OriginalScale * m_DefaultDistance;

    // The rotation should just be the default.
    m_ReticleTransform.localRotation = m_OriginalRotation;
}
```
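The placement math in this SetPosition overload can be checked without Unity. This is an illustrative sketch, not VR Samples code: System.Numerics.Vector3 stands in for UnityEngine.Vector3, ReticlePlacement and Place are hypothetical names, and forward is assumed to be a unit vector (Unity’s Ray normalizes its direction, so ray.direction qualifies).

```csharp
using System.Numerics;

public static class ReticlePlacement
{
    // Put the reticle defaultDistance units along the ray and scale it by that
    // distance, so its size relative to the view stays constant.
    public static (Vector3 Position, Vector3 Scale) Place(
        Vector3 origin, Vector3 forward, Vector3 originalScale, float defaultDistance)
    {
        Vector3 position = origin + forward * defaultDistance;
        Vector3 scale = originalScale * defaultDistance;
        return (position, scale);
    }
}
```

With an origin of (0, 2, 0), a forward direction of (1, 0, 0), an original scale of (1, 1, 1), and an example distance of 5, the reticle lands at (5, 2, 0) with scale (5, 5, 5).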

Changing the signature causes a compilation error in VREyeRaycaster until we pass ray.origin and ray.direction to the m_Reticle.SetPosition() call near the end of the VREyeRaycaster.EyeRaycast function (the call that runs when the ray hits nothing):

```csharp
if (m_Reticle)
    m_Reticle.SetPosition (ray.origin, ray.direction);
```

To pull everything together in Unity, make sure the fields of VREyeRaycaster are filled in the inspector. You will need to manually drag CenterEyeAnchor into the “Line Renderer” field, which populates m_LineRenderer, and TrackingSpace into the “Tracking Space” field.

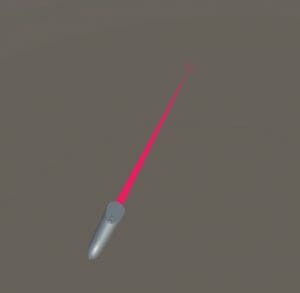

If you run the scene in a headset, the reticle will appear at the end of the laser beam that extends from the controller.

Teleport Manager and Player

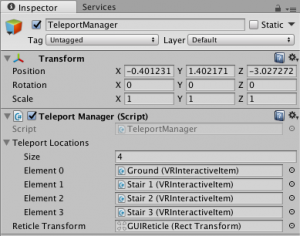

It’s finally time to implement the scripts for teleportation. Create an empty GameObject named TeleportManager. Create a new script, Assets/Scripts/TeleportManager.cs, and attach it to the TeleportManager GameObject.

Open TeleportManager.cs and create the class:

```csharp
using System;
using UnityEngine;
using VRStandardAssets.Utils;

public class TeleportManager : MonoBehaviour {
    // Raised with the reticle's Transform when a teleport location is clicked.
    public static event Action<Transform> DoTeleport;

    [SerializeField] VRInteractiveItem[] teleportLocations;
    [SerializeField] Transform reticleTransform;
}
```

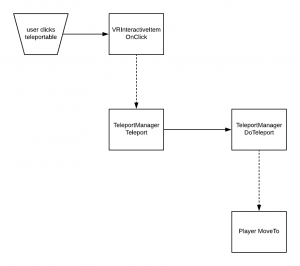

The VRInteractiveItem component, defined in Assets/VRStandardAssets/Scripts/VRInteractiveItem.cs, allows you to interact with objects in VR. VRInteractiveItem provides events that are activated when different types of interactions occur. We will be using the OnClick event.

teleportLocations is an array of VRInteractiveItems that, when clicked, cause the user to teleport to the location of the reticle. We will populate teleportLocations from the inspector.

When the reticle targets a teleport location and the controller is clicked, the player will be teleported to the position of reticleTransform.

The actual teleporting will be done by the Player object, which we will create later. Player will contain a method that subscribes to TeleportManager.DoTeleport. DoTeleport is an event, just like VRInteractiveItem.OnClick.

Within TeleportManager, add the following methods:

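```csharp
void OnEnable() {
    // Run Teleport whenever any teleport location is clicked.
    foreach (VRInteractiveItem t in teleportLocations) {
        t.OnClick += Teleport;
    }
}

void OnDisable() {
    // Remove the subscriptions added in OnEnable.
    foreach (VRInteractiveItem t in teleportLocations) {
        t.OnClick -= Teleport;
    }
}

void Teleport() {
    // Notify subscribers (such as Player) of the reticle's current Transform.
    if (DoTeleport != null) {
        DoTeleport(reticleTransform);
    } else {
        Debug.Log("DoTeleport event has no subscribers.");
    }
}
```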

Unity calls OnEnable whenever the TeleportManager component becomes enabled, including when the scene loads. In OnEnable, the Teleport method subscribes to the OnClick event of each teleport location, so TeleportManager.Teleport runs whenever a teleport location is clicked. Unity calls OnDisable when the component is disabled or destroyed; unsubscribing there keeps the teleport locations from holding references to a TeleportManager that is no longer active, which prevents memory leaks.

The Teleport method raises the DoTeleport event, which runs every subscribed method with reticleTransform as its argument. If nothing has subscribed, it logs a message instead.
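The publish/subscribe wiring can be sketched outside Unity to show why the subscribe/unsubscribe pairing matters. This is an illustration under assumptions, not the tutorial’s classes: TeleportHub and Traveler are hypothetical names (Traveler plays the role of the Player subscriber built next), and a System.Numerics.Vector3 replaces the reticle Transform.

```csharp
using System;
using System.Numerics;

public static class TeleportHub
{
    public static event Action<Vector3> DoTeleport;

    // Raise the event; return false when no one is subscribed.
    public static bool Teleport(Vector3 target)
    {
        // Copy the delegate so the null check and the call see the same subscribers.
        Action<Vector3> handlers = DoTeleport;
        if (handlers == null) return false;
        handlers(target);
        return true;
    }
}

public sealed class Traveler
{
    public Vector3 Position { get; private set; }

    // Counterparts of OnEnable / OnDisable in a MonoBehaviour.
    public void Enable() => TeleportHub.DoTeleport += MoveTo;
    public void Disable() => TeleportHub.DoTeleport -= MoveTo;

    private void MoveTo(Vector3 target) => Position = target;
}
```

A static event keeps a reference to every subscriber until it is removed, so skipping the Disable step would leave the event calling an object that should be gone.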

Now we’ll create a wrapper object for the player. Create an empty GameObject named Player, and attach a new script, Assets/Scripts/Player.cs. Nest OVRCameraRig under Player because the camera is the point of view of the player. Set Player’s position to (0, 1.8, 0), and reset OVRCameraRig’s position to (0, 0, 0). OVRCameraRig’s position is now relative to Player.

Add the code for Player.cs:

```csharp
using System;
using System.Collections;
using System.Collections.Generic;
using UnityEngine;

public class Player : MonoBehaviour {
    Vector3 playerPosition; // Keeps track of where Player will be teleported.
    [SerializeField] float playerHeight = 1.8f;

    void OnEnable() {
        TeleportManager.DoTeleport += MoveTo;
    }

    void OnDisable() {
        TeleportManager.DoTeleport -= MoveTo;
    }

    void MoveTo(Transform destTransform) {
        // Set the new position.
        playerPosition = destTransform.position;
        // Player's eye level should be playerHeight above the new position.
        playerPosition.y += playerHeight;
        // Move Player.
        transform.position = playerPosition;
    }
}
```

playerPosition is a placeholder for the position where the player will be teleported. playerHeight exists because we need to take the player’s eye level into consideration when we teleport.

In OnEnable, the Player.MoveTo method is subscribed to the TeleportManager.DoTeleport event, and OnDisable unsubscribes it.

Player.MoveTo does the work of teleporting a player. We add playerHeight to the y coordinate of the destination Transform’s position to account for eye level. Then we move Player by updating the position of its Transform to the new destination.
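The position update in MoveTo is simple enough to check by hand. The sketch below is a Unity-free illustration, with System.Numerics.Vector3 standing in for UnityEngine.Vector3 and TeleportMath/EyeLevelDestination as hypothetical names.

```csharp
using System.Numerics;

public static class TeleportMath
{
    // The reticle sits on the surface that was hit; lift the destination by
    // the player's eye height, as Player.MoveTo does.
    public static Vector3 EyeLevelDestination(Vector3 surfacePoint, float playerHeight)
    {
        Vector3 destination = surfacePoint; // Vector3 is a struct, so this is a copy
        destination.Y += playerHeight;
        return destination;
    }
}
```

Teleporting onto a stair whose top is at (2, 0.5, 3) with a height of 1.8 puts Player at about (2, 2.3, 3), 1.8 units above the step.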

Creating Teleport Locations

Our last step will be creating teleport locations.

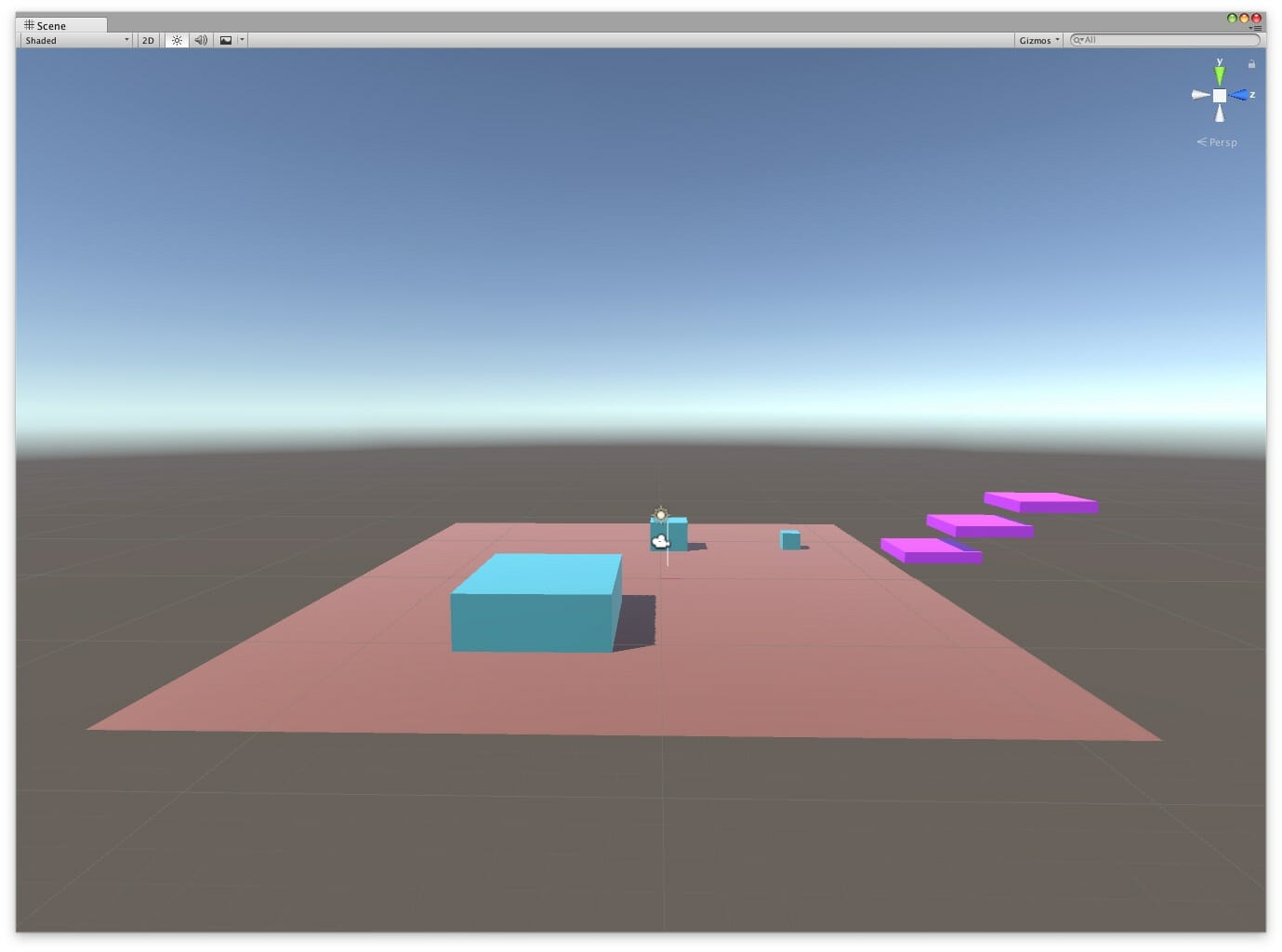

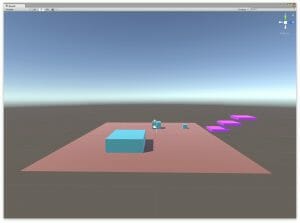

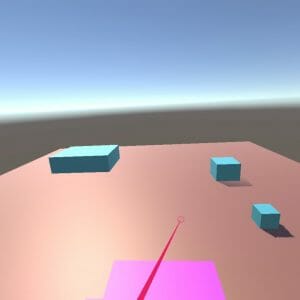

In the Unity hierarchy, create a plane named “Ground” and position it at the origin. Scale Ground up so there is room to move around, and attach VRStandardAssets/Scripts/VRInteractiveItem.cs. The laser’s raycast only detects objects with colliders; Unity’s plane and cube primitives include one by default. Create a couple of cubes on the ground to serve as waypoints. As we teleport across the ground, these waypoints will help us tell where we are.

Now create some thinner cubes and form them into the steps of a staircase. Nest the stairs in an empty GameObject named Stairs, and attach VRInteractiveItem.cs to each stair.

Finally, to pull everything together, we will populate the fields of TeleportManager. In the inspector, open the “Teleport Locations” field, and set the size equal to the number of VRInteractiveItems. Fill in the elements by dragging Ground and each of the stairs from the hierarchy window to the fields in the inspector. Fill in the “Reticle Transform” field with GUIReticle.

Building the Project

Now we’re ready to test the app. Plug in your Oculus Go headset or your Samsung phone, and select “Build and Run” from the File menu. If everything works, you will be able to teleport to any location on the ground or on the stairs by pointing the controller and clicking the trigger button. You have now successfully implemented teleporting in VR!

CREDIT: Featured image: photo by Martin Sanchez on Unsplash